LLM Farm

Artem Savkin

4.3


Version Details

| Field | Value |
| --- | --- |
| Publisher Country | US |
| Release Date in Country | 2023-12-13 |
| Categories | Developer Tools |
| Countries / Regions | US |
| Developer Website | Artem Savkin |
| Support URL | Artem Savkin |
| Content Rating | 4+ |


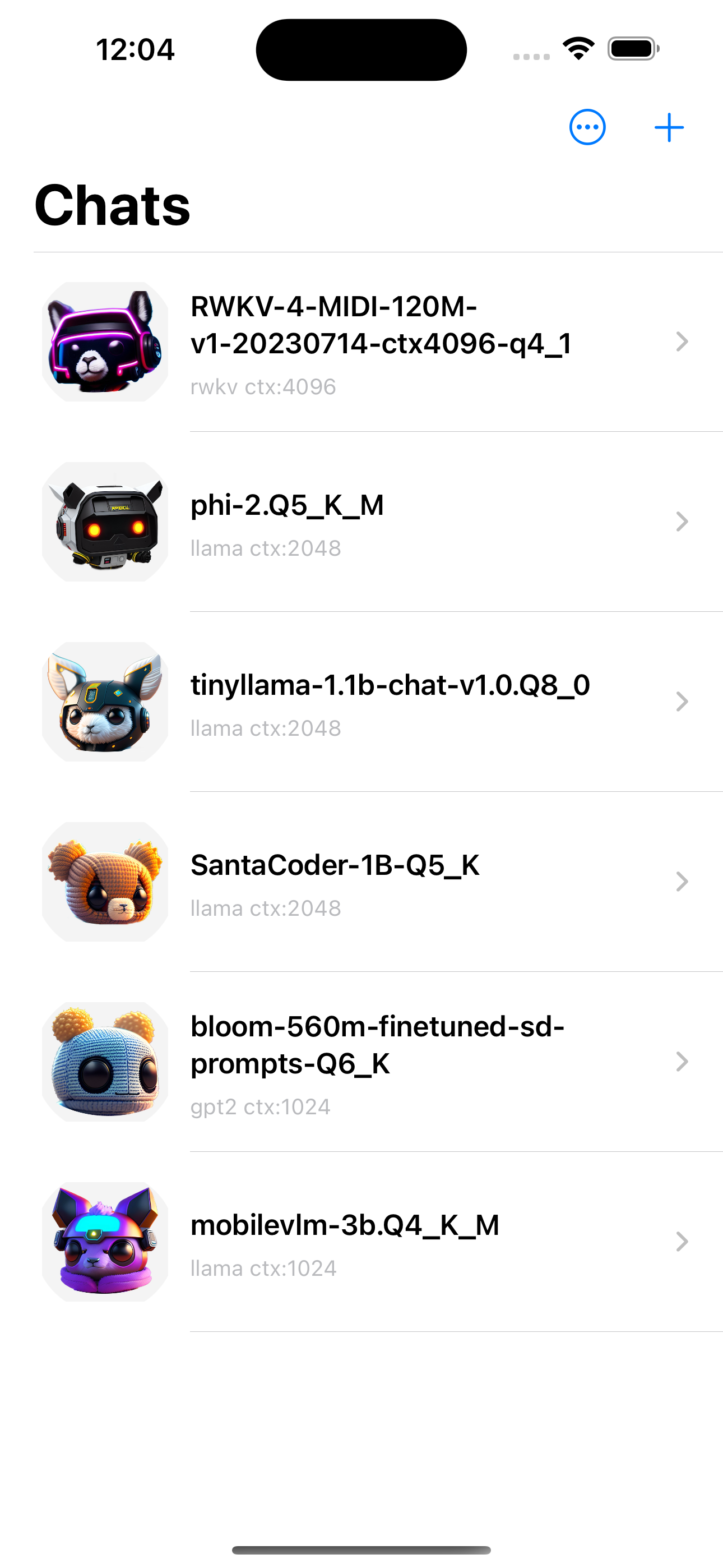

LLMFarm is an iOS and macOS app for working with large language models (LLMs). It lets you load different LLMs with specific parameters.

# Features

* Various inferences

* Various sampling methods

* Metal

* Model setting templates

# Inferences

* LLaMA

* GPTNeoX

* Replit

* GPT2 + Cerebras

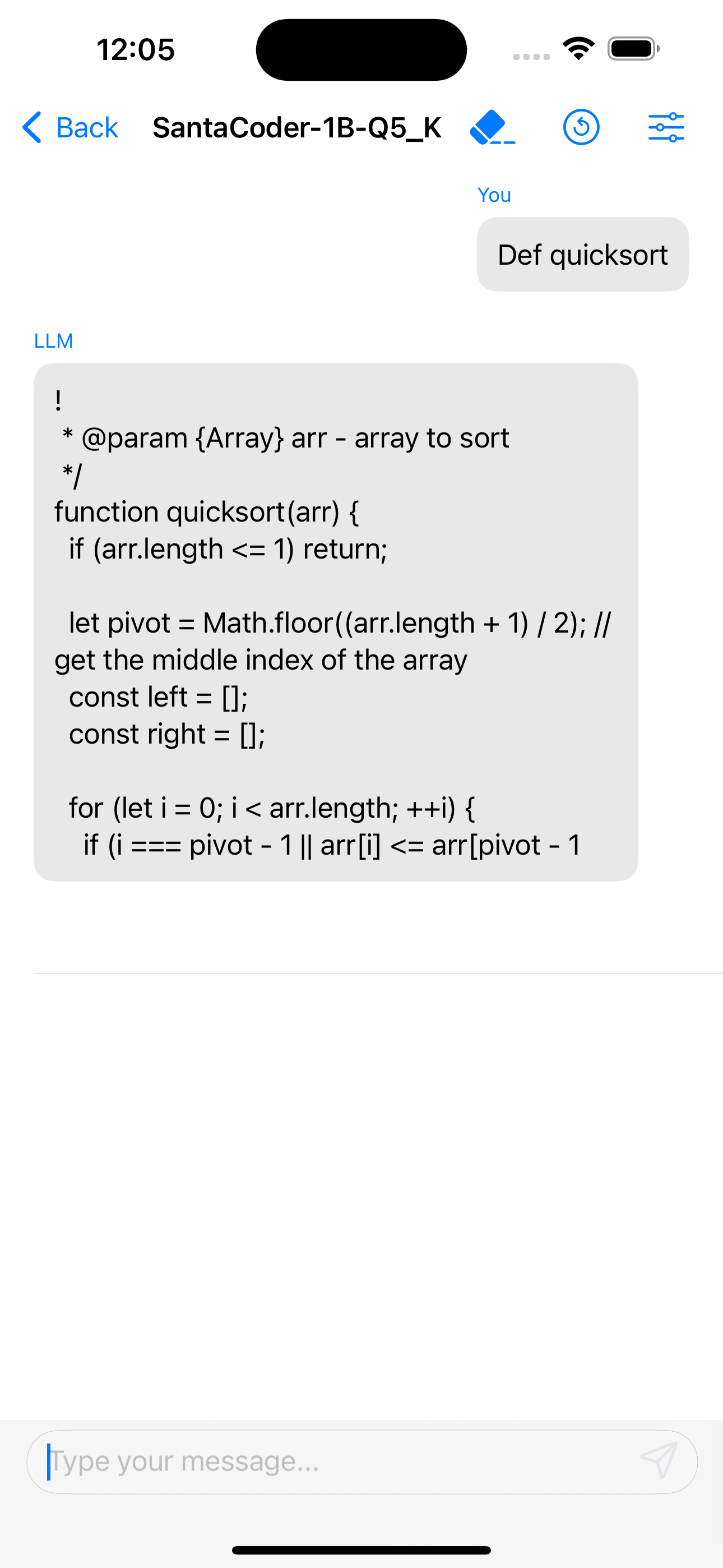

* Starcoder(Santacoder)

* RWKV

* Falcon

* MPT

* Bloom

* StableLM-3b-4e1t

* Qwen

* Gemma

* Phi

* Mamba

* Others

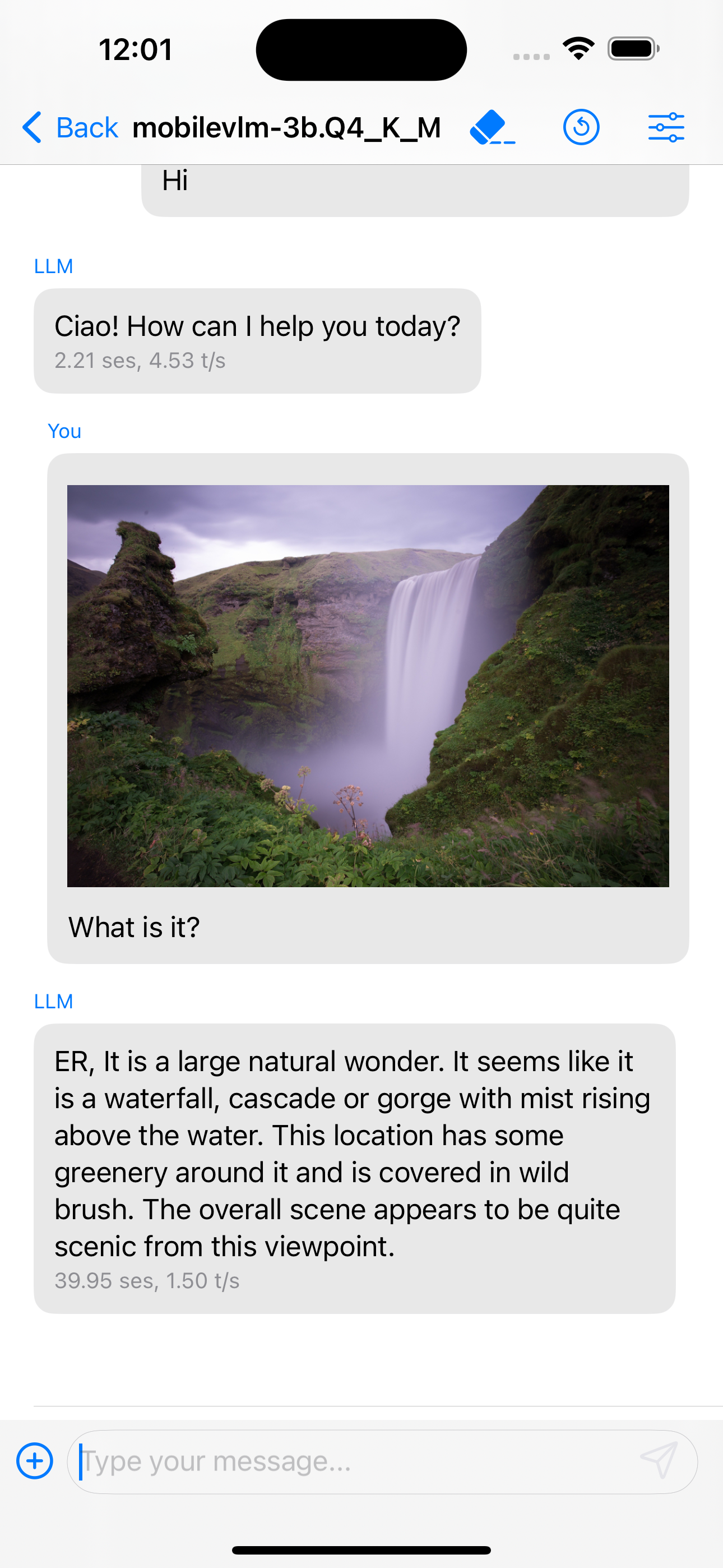

# Multimodal

* LLaVA 1.5 models

* Obsidian

* Bunny

* MobileVLM 1.7B/3B models

Note: For Falcon, Alpaca, GPT4All, Chinese LLaMA / Alpaca and Chinese LLaMA-2 / Alpaca-2, Vigogne (French), Vicuna, Koala, OpenBuddy (Multilingual), Pygmalion/Metharme, WizardLM, Baichuan 1 & 2 + derivations, Aquila 1 & 2, Mistral AI v0.1, Refact, Persimmon 8B, MPT, and Bloom, select the llama inference in model settings.

# Sampling methods

* Temperature (temp, top-k, top-p)

* Locally Typical Sampling

* Mirostat

* Greedy

* Grammar
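As a rough illustration of how the temperature, top-k, top-p, and greedy options in the list above interact, here is a minimal Python sketch. It is not LLMFarm's implementation: the function name `sample_token` and the default values are assumptions chosen for the example, and it works on a plain list of logits rather than a real model.

```python
import math
import random


def sample_token(logits, temp=0.8, top_k=40, top_p=0.95, rng=random):
    """Pick one token index from raw logits (hypothetical helper, not an LLMFarm API)."""
    if temp <= 0:
        # Greedy decoding: always take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])

    # Temperature: divide logits before softmax; lower values sharpen the distribution.
    scaled = [x / temp for x in logits]
    peak = max(scaled)
    weights = [math.exp(x - peak) for x in scaled]  # subtract max for numerical stability
    total = sum(weights)
    probs = sorted(((w / total, i) for i, w in enumerate(weights)), reverse=True)

    # Top-k: keep only the k most probable tokens.
    if top_k > 0:
        probs = probs[:top_k]

    # Top-p (nucleus): keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for p, i in probs:
        kept.append((p, i))
        cumulative += p
        if cumulative >= top_p:
            break

    # Sample from the surviving tokens; random.choices renormalizes the weights.
    return rng.choices([i for _, i in kept], weights=[p for p, _ in kept], k=1)[0]
```

Calling `sample_token(logits, temp=0)` gives greedy behavior, while raising `temp` or loosening `top_k` and `top_p` lets less likely tokens through. Locally typical sampling, Mirostat, and grammar-constrained sampling use different filtering rules and are not covered by this sketch.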

Download LLM Farm

Not Available

Average Rating

57


Featured Reviews

By Not important man

2024-08-10

Version

I think it's overall pretty good! However, I'm not a fan of the animal icons for every conversation. I believe using regular icons, or even having the option to have no icon at all, would attract more users to the app. Additionally, it would be great to have a "new conversation" button in the menu instead of creating a duplicate conversation each time, which just makes the title longer and longer. Also, maybe using AI to generate titles, like ChatGPT does, would be great. Lastly, it would be awesome if the markdown format could be displayed during the generation process instead of after it's done. That would be awesome 👍 Anyway, thank you very much for this app!

By Feeling defrauded

2024-02-21

Version

This app looks pretty promising, but it’s a little bit daunting to someone who’s not as familiar with setting up LLMs. For example, how do you download the LLMs and where do you go to get them? Which LLMs are likely to work? It might be a good idea to include specific LLMs that have been tested on which devices. Some sort of tutorial or instructions would be really useful.

By climboy

2024-08-20

Version

I really like this app after trying it out. I can see in the preview images that you can send pictures to the LLM, but I couldn't find the plus sign at the bottom left corner on my own phone. How does it work? Model downloads are terminated when the phone screen turns off. It would be even better if the app could run in the background and share computing power with other users on the local network through an API. Very good!
